This article was first published in the June 2010 issue of the magazine Testing Experience.

HTTP-level tools record HTTP requests on the HTTP protocol level. They usually provide functionality for basic parsing of HTTP responses and building of new HTTP requests. Such tools may also offer parsing and extraction of HTML forms for easier access to form parameters, automatic replacement of session IDs by placeholders, automatic cookie handling, functions to parse and construct URLs, automatic image URL detection and image loading, and so on. Extraction of data and validation are often done with the help of string operations and regular expressions, operating on plain HTTP response data. Even if HTTP-level tools address many load testing needs, writing load test scripts using them can be difficult.

Challenges with HTTP-Level Scripting

Challenge 1: Continual Application Changes

Many of you probably know this situation: A load test needs to be prepared and executed during the final stage of software development. There is a certain time pressure, because the go-live date of the application is in the near future. Unfortunately, there are still some ongoing changes in the application, because software development and web page design are not completed yet.

Your only chance is to start scripting soon, but you find yourself struggling to keep up with application changes and adjusting the scripts. Some typical cases are described below.

- The protocol changes for a subset of URLs, for example, from HTTP to HTTPS. This could happen because a server certificate becomes available and the registration and checkout process of a web shop, as well as the corresponding static image URLs, are switched to HTTPS.

- The URL path changes due to renamed or added path components.

- The URL query string changes by renaming, adding or removing URL parameters.

- The host name changes for a subset of URLs. For example, additional host names may be introduced for a new group of servers that delivers static images or for the separation of content management URLs and application server URLs that deliver dynamically generated pages.

- HTML form parameter names or values are changed or form parameters are added or removed.

- Frames are introduced or the frame structure is changed.

- JavaScript code is changed, which leads to new or different HTTP requests, to different AJAX calls, or to a new DOM (Document Object Model) structure.

In most of these cases, HTTP-level load test scripts need to be adjusted. There is even a high risk that testers do not notice certain application changes, and although the scripts do not report any errors, they do not behave like the real application. This may have side effects that are hard to track down.

Challenge 2: Dynamic Data

Even if the application under test is stable and does not undergo further changes, there can be serious scripting challenges due to dynamic form data. This means that form field names and values can change with each request. One motivation to use such mechanisms is to prevent the web browser from recalling filled-in form values when the same form is loaded again. Instead of “creditcard_number”, for example, the form field might have a generated name like “cc_number_9827387189479843”, where the numeric part is changed every time the page is requested. Modern web applications also use dynamic form fields for protection against cross-site scripting attacks or to carry security-related tokens.

Another problem can be data that is dynamically changing, because it is maintained and updated as part of the daily business. If, for example, the application under test is an online store that uses search-engine-friendly URLs containing catalog and product names, these URLs can change quite often. Even worse, sometimes the URLs contain search-friendly catalog and product names, while embedded HTML form fields use internal IDs, so that there is no longer an obvious relation between them.

Session IDs in URLs or in form fields may also need special handling in HTTP-level scripts. The use of placeholders for session IDs is well supported by most load test tools. However, special script code might be needed, if the application not only passes these IDs in an unmodified form, but also uses client-side operations on them or inserts them into other form values.

To handle the above-mentioned cases, HTTP-level scripts need manually coded, and thus unfortunately also error-prone, logic.

Challenge 3: Modeling Client-Side Activity

In modern web applications, JavaScript is often used to assemble URLs, to process data, or to trigger requests. The resulting requests may also be recorded by HTTP-level tools, but if their URLs or form data change dynamically, the logic that builds them needs to be reproduced in the test scripts.

Besides this, it can be necessary to model periodic AJAX calls, for example to automatically refresh the content of a ticker that shows the latest news or stock quotes. For a realistic load simulation, this also needs to be simulated by the load test scripts.

Challenge 4: Client-Side Web Browser Behavior

For correct and realistic load simulations, the load test tool needs to implement further web browser features. Here are a few examples:

- Caching

- CSS handling

- HTTP redirect handling

- Parallel and configurable image loading

- Cookie handling

Many of these features are supported by load test tools, even if the tools act on the HTTP level, but not necessarily all of them are supported adequately. If, for example, the simulated think time between requests of a certain test case is varied, a low-level test script might always load the cacheable content in the same way – either it was recorded with an empty cache and the requests are fired, or the requests were not recorded and will never be issued.

DOM-Level Scripting

What is the difference between HTTP-level scripting tools and DOM-level scripting tools? The basic distinction between the levels is the degree to which the client application is simulated during the load test. This also affects the following characteristics:

- Representation of data: DOM-level tools use a DOM tree instead of simple data structures.

- Scripting API: The scripting API of DOM-level tools works on DOM elements instead of strings.

- Amount and quality of recorded or hard-coded data: There is much less URL and form data stored with the scripts. Most of this data is handled dynamically.

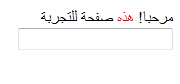

DOM-level tools add another layer of functionality on top. Besides the handling of HTTP, these tools also parse the contained HTML and CSS responses to build a DOM tree from this information, similar to a real web browser. The higher-level API enables the script creator to access elements in the DOM tree using XPath expressions, or to perform actions or validations on certain DOM elements. Some tools even incorporate a JavaScript engine that is able to execute JavaScript code during the load test.

Advantages

DOM-level scripting has a number of advantages:

- Load test scripts become much more stable against changes in the web application. Instead of storing hard-coded URLs or data, they operate dynamically on DOM elements like “the first URL below the element xyz” or “hit the button with id=xyz”. This is especially important as long as application development is still ongoing. As a consequence, you can start scripting earlier.

- Scripting is easier and faster, in particular if advanced script functionality is desired.

- Validation of result pages is also easier on the DOM level compared to low-level mechanisms like regular expressions. For example, checking a certain HTML structure or the content of an element, like “the third element in the list below the second H2” can be easily achieved by using an appropriate XPath to address the desired element.

- Application changes like changed form parameter names normally do not break the scripts, if the form parameters are not touched by the script. But, if such a change does break the script because the script uses the parameter explicitly, the error is immediately visible since accessing the DOM tree element will fail. The same is true for almost all kinds of application changes described above. Results are more reliable, because there are fewer hidden differences between the scripts and the real application.

- CSS is applied. Assume there is a CSS change such that a formerly visible UI element that can submit a URL becomes invisible now. A low-level script would not notice this change. It would still fire the old request and might also get a valid response from the server, in which case the mismatch between the script and the changed application could easily remain unnoticed. In contrast, a DOM-level script that tries to use this UI element would run into an error that is immediately visible to the tester.

- If the tool supports it, JavaScript can be executed. This avoids complicated and error-prone re-modeling of JavaScript behavior in the test scripts. JavaScript support has become more and more important in recent years with the evolution of Web 2.0/AJAX applications.

Disadvantages

There is one disadvantage of DOM-level scripting. The additional functionality needs more CPU and main memory, for instance to create and handle the DOM tree. Resource usage increases even more if JavaScript support is activated.

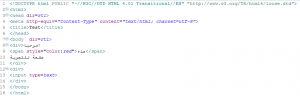

Detailed numbers vary considerably with the specific application and structure of the load test scripts. Therefore, the following numbers should be treated with caution. Table 1 shows a rough comparison, derived from different load testing projects for large-scale web applications. The simulated client think times between a received response and the next request were relatively short. Otherwise, significantly more users might have been simulated per CPU.

| Scripting Level |

Virtual Users per CPU |

| HTTP Level |

100..200 |

| DOM Level |

10..30 |

| DOM Level + JavaScript execution |

2..10 |

If you evaluate these numbers, please keep in mind that machines are becoming ever more powerful and that there are many flexible and easy-to-use on-demand cloud computing services today, so that resource usage should not prevent DOM-level scripting.

Conclusion

Avoid hard-coded or recorded URLs, parameter names and parameter values as far as possible. Handle everything dynamically. This is what we have learned. One good solution to achieve this is to do your scripting on the DOM level, not on the HTTP level. If working on the DOM level and/or JavaScript execution are not possible for some reason, you always have to make compromises and accept a number of disadvantages.

We have created and executed web-application load tests for many years now, in a considerable number of different projects. Since 2005, we have mainly used our own tool, Xceptance LoadTest (XLT), which is capable of working on different levels and supports fine-grained control over options like JavaScript execution. In our experience, the advantages of working on the DOM level, in many cases even with JavaScript execution enabled, generally by far outweigh the disadvantages. Working on the DOM level makes scripting much easier and faster, the scripts handle many of the dynamically changing data automatically, and the scripts become much more stable against typical changes in the web application.

Ronny Vogel is technical manager and co-founder of Xceptance. His main areas of expertise are test management, functional testing and load testing of web applications in the field of e-commerce and telecommunications. He holds a Masters degree (Dipl.-Inf.) in computer science from the Chemnitz University of Technology and has 16 years of experience in the field of software testing. Xceptance is a provider of consulting services and tools in the area of software quality assurance, with headquarters in Jena, Germany and a branch office in Cambridge, Massachusetts, USA.