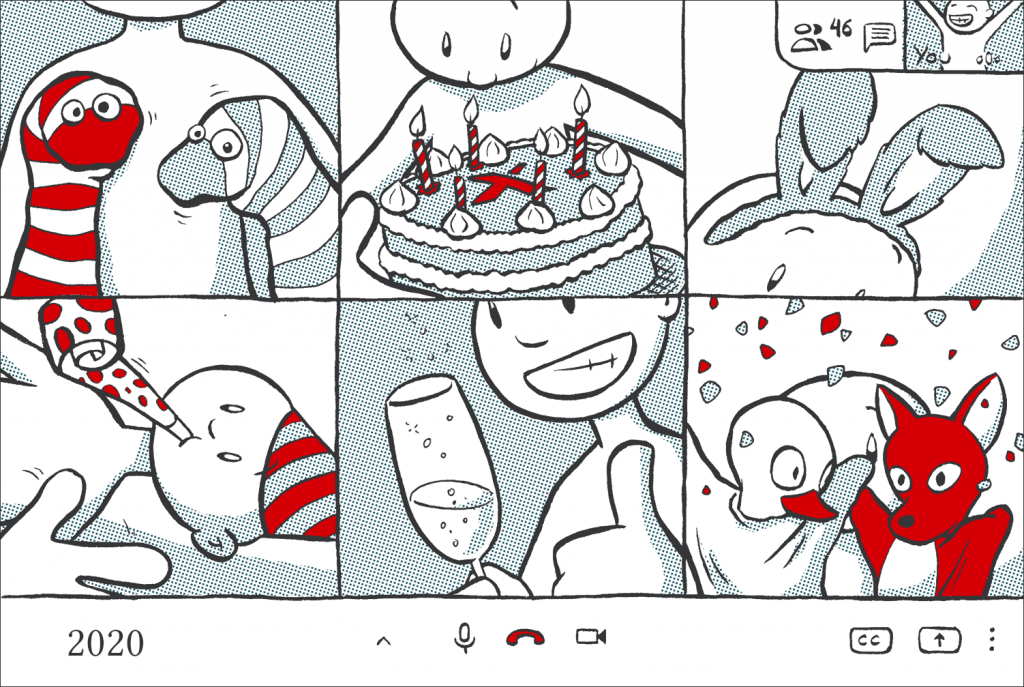

The little Ozobot speeds around the corner, flashing colorfully, winds its way along a spiral, collides briefly with one of its colleagues and finally finds its way through the maze. Color sensors on its underside guide it to its destination along a defined route. The miniature robot was not programmed by a high-tech engineer, but by ten-year-old Magnus, son of our co-founder Simone Drill.

During a programming workshop, he learned to give commands to the Ozobot and to control it with color codes. The workshop was run by witelo e.V. from Jena, which offers working groups and experimentation courses at schools as well as extracurricular learning formats. These science and technology learning venues were founded to promote STEM education, of which computer science is one component. Students learn the basics of coding, robotics and algorithms in research clubs, on hands-on days and in a wide range of vacation activities. In this setting, Magnus gained his first programming experience through play and took his enthusiasm from the workshop home with him.

Word of witelo’s work quickly spread among the Xceptance staff, and many other children followed Magnus’ example. In doing so, they are following in the footsteps of their parents, who work in programming, software testing and development at Xceptance.

“It’s close to our hearts that our children remain curious and have the chance to test their skills, so we would like to support the dedicated work of the association with a donation,” says Simone Drill. “We want to spark young people’s excitement for IT topics and help them discover career prospects.”

With more than 80 network partners from research institutions, companies, associations and initiatives, witelo has become firmly established in Jena over the past ten years. In addition, schools from Jena and the surrounding area, as well as many private individuals, enrich the educational landscape with their commitment. This positive development encourages managing director Dr. Christina Walther in her work: “Students should learn how important an understanding of science and technology is in their everyday lives – but also what great job opportunities the STEM subjects offer.” That’s why she stays in close contact with the parents of her course participants and promotes partnerships with companies like Xceptance. This ensures that the valuable cooperation between schools, science and business continues to enrich the technical education of young people, and that fun and learning go hand in hand.

About witelo e.V.

Witelo is the network of science and technology learning sites in Jena. Its goal is to get children and young people excited about STEM subjects, i.e. mathematics, computer science, natural sciences and technology. A broad alliance of the city, businesses and institutions supports witelo; the association’s work is financed by supporters and sponsors.

You can find further information on the numerous offers and activities on the witelo website.

There are certainly things that money can’t buy, as the Beatles once sang. Still, you might agree with us that money, if managed well, can do good things.