TL;DR: Neodymium is a Java-based test library for web automation that utilizes existing libraries (Selenide, WebDriver, Allure, JUnit, Maven) and concepts (localization, test multiplication, page objects) and adds missing components such as test data handling, starter templates, multi-device handling, and other small but useful everyday helpers.

Motivation

As a company focused on quality assurance and testing, Xceptance always needs test automation software, especially end-to-end automation software. Several years ago we built a Firefox add-on that was designed to create and run browser automation. The tool was primarily used by people who didn’t necessarily have a strong background in software development. Today, the landscape is a bit different: Mozilla cut the cord on the APIs we were using and standard programming languages have largely taken over test automation because they are more flexible and less proprietary. These changes convinced us it was time to implement an idea we had already hatched, namely our own Open Source test automation project: Neodymium. It is written in and utilizes the Java platform, it is MIT licensed, and of course you will find it on GitHub: https://github.com/Xceptance/neodymium-library

Basis

There are many libraries out there to aid web automation in Java, so developers are faced with the task of choosing ones they like and somehow making them work together. On top of that, there are tasks that require some custom code to work properly. We identified the overall tooling problem mostly as a hurdle in getting started and setting up a project. Finally, there are always things missing such as test data handling, concurrency, and common patterns which you don’t want to have to develop yourself. We chose JUnit, Selenide, WebDriver, Maven, and Allure for the base tooling.

Selenide provides an easy-to-use API to control Selenium WebDriver. Allure offers good mechanics to generate useful reports based on the assertions and actions you perform throughout your test cases. Maven is used to set up the build and execution environment for our framework and all the test projects. We decided to use JUnit as the test runner since it is the de facto standard in the Java world, but we enhanced the capabilities of JUnit to do even more. At its heart, Neodymium is a JUnit runner that wraps default JUnit behavior and adds significant useful functionality to it.

Multiple Browsers

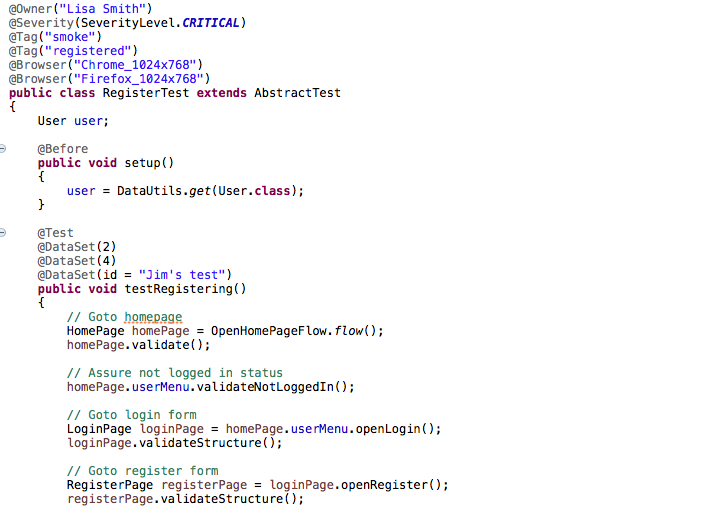

You want to be able to run the same tests for different resolutions and/or browsers to simulate the browsers most common among your users. Additionally, you need to be able to implement small differences within your test execution to address variants such as responsive designs or progressive web apps. So we added a way to run web browsers with different configurations and retrieve the current device type and resolution from within the test.

Neodymium provides a Java annotation that can be added to your test case, in order to run different browser setups. Neodymium is very flexible in configuring browsers, allowing you to fully leverage the Chrome device emulation offerings.

Test Data

Another common task is the execution of a test case with different data sets, such as testing address forms with all the relevant variations. The basic idea is to have test data and data sets in structured files next to your code, preferably as JSON, XML, property style, or simply CSV. Hence, we introduced an easy-to-use API to access the current data set and retrieve basic types from it. Furthermore, you can configure specific scenarios running only a subset or even no data set at all by adding proper annotations. To complete the picture, Neodymium supports test data on a global and package-level scope.

Localization

Another recurring topic in modern software projects is localization. Most of the web sites that are in need of test automation also support several locales. We decided to provide an out-of-the-box solution.

Neodymium’s localization feature makes use of a central translation file written in YAML format. YAML helps to structure the translations. Additionally, we implemented a simple way to override specific translations for different locales. The localized text can be easily retrieved using Neodymium API methods that are globally available.

Development Support

As it is essential to understand what your test is doing, we added a feature that enables you to slow down the test execution and highlight elements that match the current selector. Since you can chain selectors using Selenide, any chain of elements is also represented by the highlighting. With this feature activated, a developer can track down the cause of test failures much more easily. In addition, we provide information on how to set up logging in your project should you need that. Finally, we decided to use the Page Object pattern to organize the website-related code to reduce the maintenance effort and increase reusability.

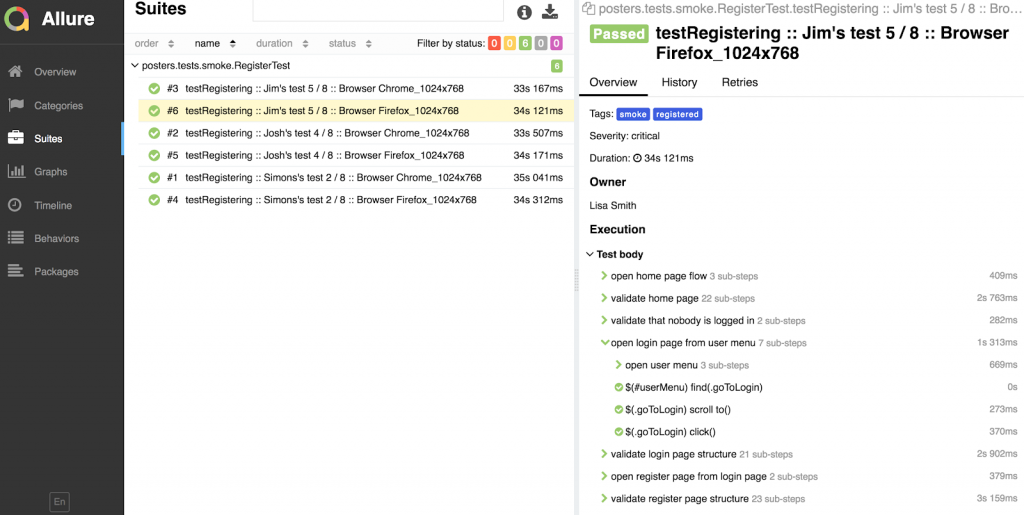

Reporting

Allure is a widely used framework to generate reports. When using Neodymium with Selenide, your automation code also contributes report information. Your test classes and methods are listed as well as detailed Selenide automation commands. In case of errors, additional details such as screenshots and source code of the page in question are available. Neodymium also provides means to structure code blocks for reporting purposes.

Continuous Integration

Implementing principles of continuous integration will deliver more reliable software by increasing efficiency, and automation is nothing without a continuous integration environment. Yet in almost every development cycle you will eventually end up needing varied settings due to differences in your setup, which can get complicated. Neodymium provides support for extra configuration files during development to override the standard production settings as needed. Furthermore, the framework supports overriding properties that change the configuration of your test execution by setting environment variables or simply passing Java arguments.

Because automation is supposed to run quickly, Neodymium provides support for parallel test execution and also demonstrates that setup as part of the sample test suite.

Documentation and Templates

Does Neodymium address some of your test automation challenges? Does it sound like a good entry point for your test automation?

Neodymium is hosted on GitHub (https://github.com/Xceptance/neodymium-library), where the accompanying project wiki (https://github.com/Xceptance/neodymium-library/wiki) provides extensive documentation to help you get started and answer your questions.

You might also want to take a look at the comprehensive example projects using Neodymium with Cucumber (https://github.com/Xceptance/neodymium-cucumber-example) or plain Java (https://github.com/Xceptance/neodymium-example). We’ve even provided a template project (https://github.com/Xceptance/neodymium-template) to get you started automating in no time.

License

Neodymium is licensed under the MIT License.

Who Are We

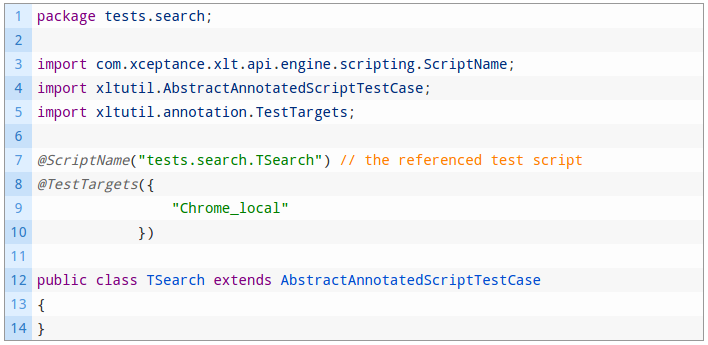

We are Xceptance. A software testing company with strong commerce knowledge and projects with customers from all around the world. Besides Neodymium, we have developed Xceptance Load Test (XLT), a load and performance test tool that is available free of charge and features an extensive range of awesome features to make the tester’s and developer’s life easier.

If you are looking for test automation that also covers the performance side of life, take a look at XLT. You can write and run load tests with real browsers including access to data from the Web Performance Timing API. In case browsers are too heavy, XLT has other modes of load testing to offer as well.

We offer professional support for Neodymium as well as implementation and training services.